Introduction

The OU-ISIR Gait Database, Multi-View Large Population Dataset with Dense Optical Flow (OUMVLP-OF) is meant to aid research efforts in the general area of developing, testing and evaluating algorithms for optical flow-based gait analysis. The Institute of Scientific and Industrial Research (ISIR), SANKEN, Osaka University (OU) has copyright in the collection of gait video and associated data and serves as a distributor of the OUMVLP-OF dataset.

Existing gait databases provide binary silhouettes and the gait energy image (GEI) as the main hand-crafted features for appearance-based gait recognition systems. However, GEI is still sensitive to some variations, such as camera viewpoint and carrying conditions.

Moreover, GEI collapses the silhouette sequence into a single image, or gait template, and therefore cannot effectively represent temporal information. To address the limitations associated with GEI and to incorporate the instantaneous motion direction and intensity into the original gait features, we propose OUMVLP-OF, which provides more informative spatio-temporal gait features in the form of 2D dense optical flow images. We use RAFT, a promising RNN-based optical flow estimator that achieves state-of-the-art (SOTA) performance and has strong cross-dataset generalization. RAFT extracts per-pixel features, builds multi-scale 4D correlation volumes for all pairs of pixels, and iteratively updates a flow field through a recurrent unit that performs lookups on the correlation volumes.
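The all-pairs correlation idea described above can be illustrated with a toy sketch in plain Python. This is not the RAFT implementation: the feature maps here are hand-written tuples rather than learned encoder outputs, the function names `all_pairs_correlation` and `pool_last_two_dims` are hypothetical, and the recurrent update step is omitted. It only shows how a 4D volume of dot products between every pair of pixels can be built and then average-pooled over its last two dimensions to obtain a coarser level.

```python
def all_pairs_correlation(feat1, feat2):
    """Build corr[i][j][k][l] = dot(feat1[i][j], feat2[k][l])."""
    return [
        [
            [
                [sum(a * b for a, b in zip(f1, f2)) for f2 in row2]
                for row2 in feat2
            ]
            for f1 in row1
        ]
        for row1 in feat1
    ]


def pool_last_two_dims(corr):
    """Average-pool every (k, l) plane with a 2x2 window (one coarser level)."""
    def pool(plane):
        return [
            [
                (plane[r][c] + plane[r][c + 1]
                 + plane[r + 1][c] + plane[r + 1][c + 1]) / 4.0
                for c in range(0, len(plane[0]) - 1, 2)
            ]
            for r in range(0, len(plane) - 1, 2)
        ]
    return [[pool(plane) for plane in row] for row in corr]


# Toy 2x2 feature maps with 2-dimensional features (illustrative values only).
feat1 = [[(1.0, 0.0), (0.0, 1.0)], [(1.0, 1.0), (0.0, 0.0)]]
feat2 = [[(1.0, 0.0), (0.0, 1.0)], [(2.0, 0.0), (0.0, 2.0)]]

corr = all_pairs_correlation(feat1, feat2)   # shape 2 x 2 x 2 x 2
pyramid = [corr, pool_last_two_dims(corr)]   # second level has shape 2 x 2 x 1 x 1
```

Pooling only the last two dimensions keeps one entry per pixel of the first image while summarizing its matches in the second image at a coarser scale.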

In addition, we evaluate various approaches to gait recognition which are robust against view a… The SMPL model is complex and informative, making it conducive to promoting the development of gait recognition systems and boosting recognition performance. Besides gait recognition, OUMVLP-OF can also be used for other gait analyses (e.g., aging progression/regression, or as training data providing genuine gait models for adversarial learning). If you use this dataset, please cite the following paper:

- A. Shehata, F. M. Castro, N. Guil, M. J. Marín-Jiménez and Y. Yagi, "OUMVLP-OF: Multi-View Large Population Gait Database With Dense Optical Flow and Its Performance Evaluation," in IEEE Access, vol. 13, pp. 87100-87111, 2025.

Dataset

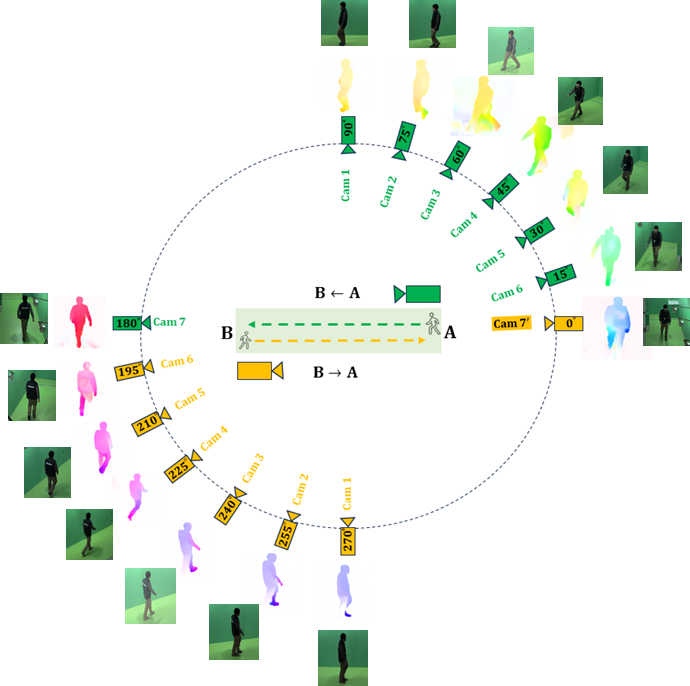

Built upon OU-MVLP, OUMVLP-OF contains 10,307 subjects with up to 14 viewing angles (0° to 90° and 180° to 270°, at 15° intervals). The raw images are captured at a resolution of 1280 x 980 pixels at 25 fps under the camera setup shown in Fig. 1. Seven network cameras are placed at 15° intervals along a quarter of a circle. The subjects walk from location A to location B and back to location A, producing 14 view sequences.
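As a quick check of the view arithmetic above, the 14 nominal viewing angles can be enumerated as follows. This is a minimal sketch; the variable names are illustrative and are not part of the dataset's file naming.

```python
STEP_DEG = 15  # angular spacing between neighbouring cameras

# Seven cameras, each seen in two walking directions, give two ranges of seven angles.
first_range = list(range(0, 90 + 1, STEP_DEG))
second_range = list(range(180, 270 + 1, STEP_DEG))

view_angles = first_range + second_range
print(view_angles)
```

A subject may have fewer than 14 sequences, since the text states "up to" 14 viewing angles.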

Fig.1: Camera setup and the estimated 2D dense optical flow from multiple views.

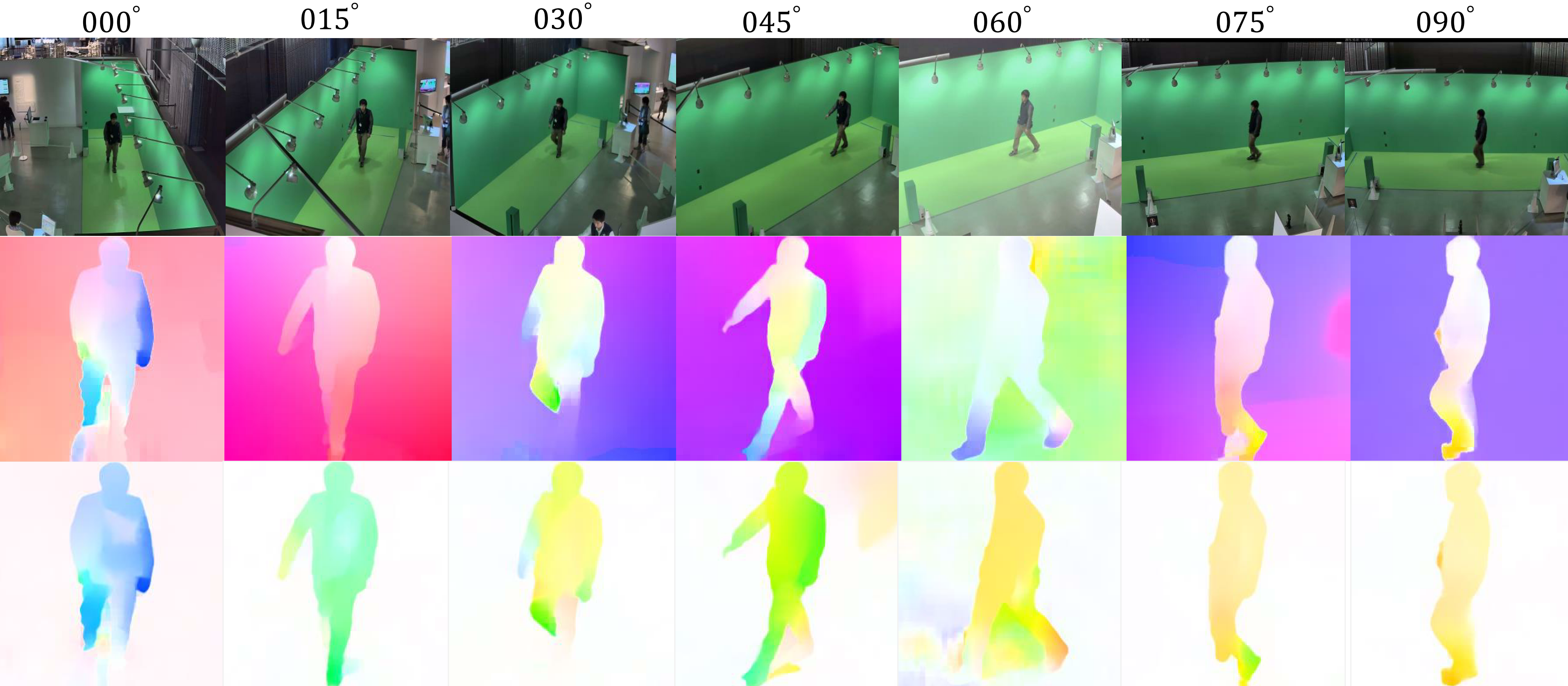

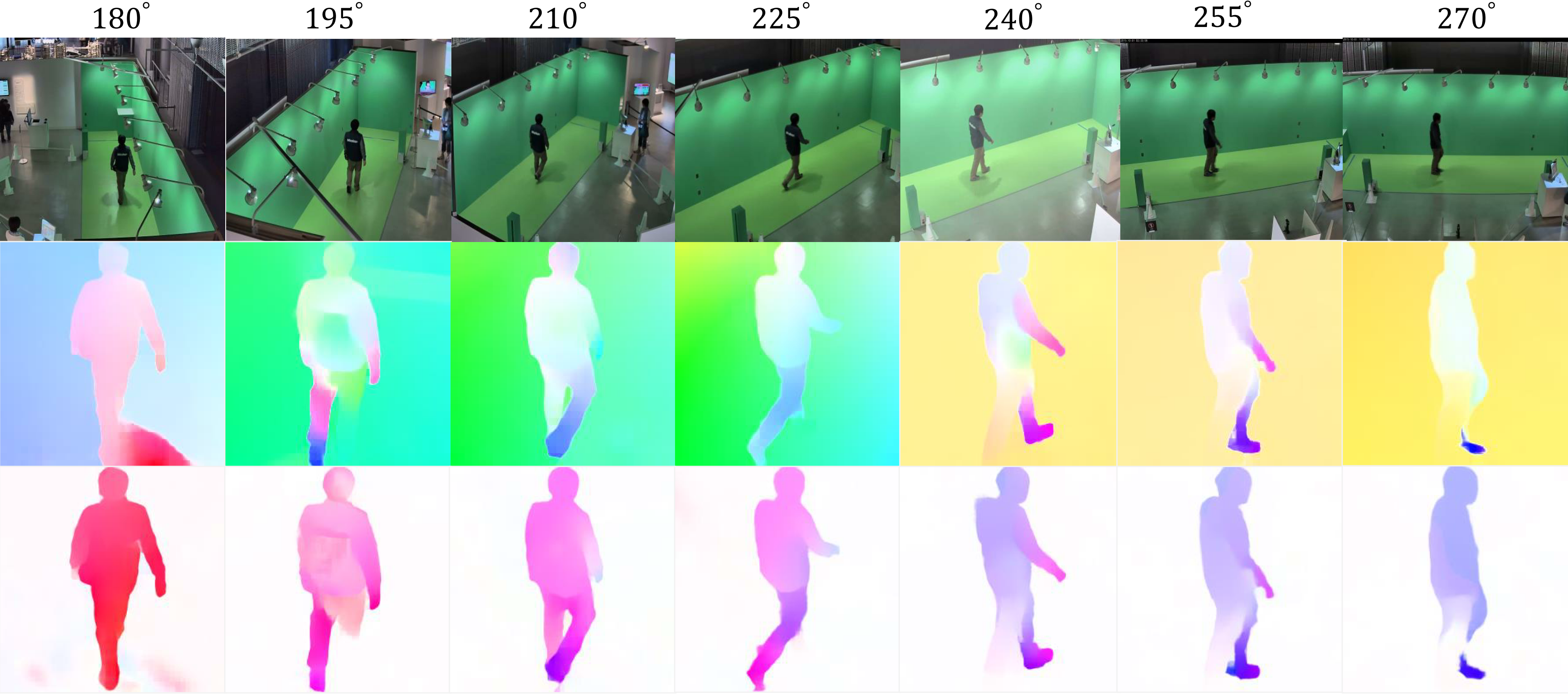

The dataset has two versions, OUMVLP-OF(V1) and OUMVLP-OF(V2). For V1, we used the corresponding binary silhouettes as masks and then cropped the walking person of interest using the estimated bounding boxes. Afterward, we centered, aligned, and resized the corresponding cropped RGB images to 256x256 resolution. Finally, the RAFT optical flow estimator was applied to the resized RGB sequences. For V2, the optical flow was first estimated with RAFT from the RGB sequence at the original 1280 x 980 resolution, and the corresponding binary silhouettes were then used to mask the background and keep only the estimated optical flow of the foreground pixels. Finally, we applied the same steps as in V1 to the estimated optical flow to obtain the cropped 256x256 2D optical flow images.
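The masking and cropping steps of the V2 pipeline described above can be sketched on a toy frame. This is an illustration under stated assumptions, not the authors' code: the helper names `mask_flow`, `bounding_box`, and `crop` are hypothetical, background flow is assumed to be set to zero, nested lists stand in for image arrays, and the centering, alignment, and 256x256 resizing steps are omitted.

```python
def mask_flow(flow, silhouette):
    """Keep flow vectors on foreground pixels; zero them elsewhere (assumed encoding)."""
    return [
        [vec if fg else (0.0, 0.0) for vec, fg in zip(flow_row, sil_row)]
        for flow_row, sil_row in zip(flow, silhouette)
    ]


def bounding_box(silhouette):
    """Return the inclusive (top, bottom, left, right) box of the foreground pixels."""
    rows = [r for r, row in enumerate(silhouette) if any(row)]
    cols = [c for row in silhouette for c, fg in enumerate(row) if fg]
    if not rows:
        raise ValueError("silhouette has no foreground pixels")
    return rows[0], rows[-1], min(cols), max(cols)


def crop(image, box):
    """Cut the inclusive box out of a row-major image."""
    top, bottom, left, right = box
    return [row[left:right + 1] for row in image[top:bottom + 1]]


# Toy 4x5 frame: constant flow (u, v) everywhere and a small silhouette.
flow = [[(1.0, -1.0)] * 5 for _ in range(4)]
silhouette = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]

masked = mask_flow(flow, silhouette)   # background flow removed
box = bounding_box(silhouette)         # person region from the silhouette
person_flow = crop(masked, box)        # masked flow restricted to the person
```

In V1 the order differs: the RGB frames are cropped and resized first, and the flow is estimated afterwards on the resized sequence.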

Fig.2: Examples of estimated optical flow from the 0°-90° views. The first row shows the original RGB sequences; the second and third rows show the corresponding optical flow with the raw background and the masked background, respectively.

Fig.3: Examples of estimated optical flow from the 180°-270° views. The first row shows the original RGB sequences; the second and third rows show the corresponding optical flow with the raw background and the masked background, respectively.

The number of frames in a sequence ranges from 18 to 35, and most sequences contain approximately 25 frames.

How to get the dataset?

To advance the state of the art in gait-based applications, this dataset, which includes the 2D dense optical flow image sequences saved as lossless .mp4 videos along with the subject ID lists of the training and testing sets, can be downloaded as compressed files in .7z format with password protection. The password will be issued on a case-by-case basis. To receive the password, the requestor must send the release agreement, signed by a legal representative of the requestor's institution (e.g., the supervisor if the requestor is a student), to the database administrator by mail, e-mail, or FAX.

How to get the 2D optical flow sequences?

Download the Dataset

The database administrator

Department of Intelligent Media, The Institute of Scientific and Industrial Research, Osaka University

Address: 8-1 Mihogaoka, Ibaraki, Osaka, 567-0047, JAPAN

FAX: +81-6-6877-4375.