Introduction

The OU-ISIR Large Population Gait Database with Real-Life Carried Object (OU-LP-Bag) is meant to aid research on developing, testing, and evaluating algorithms for vision-based gait recognition under the carried-object (CO) covariate, as well as for detecting and classifying COs when one is being carried. The dataset includes background-subtracted image sequences and the associated size-normalized (i.e., 88x128) gait energy images (GEIs). The Institute of Scientific and Industrial Research (ISIR), Osaka University (OU) holds the copyright in the collection of gait video and associated data and serves as the distributor of the OU-ISIR Gait Database. If you use this dataset, please cite the following paper:

- M.Z. Uddin, T.T. Ngo, Y. Makihara, N. Takemura, X. Li, D. Muramatsu, Y. Yagi, "The OU-ISIR Large Population Gait Database with Real-Life Carried Object and its performance evaluation," IPSJ Trans. on Computer Vision and Applications, Vol. 10, No. 5, pp. 1-11, May 2018.

Data capture

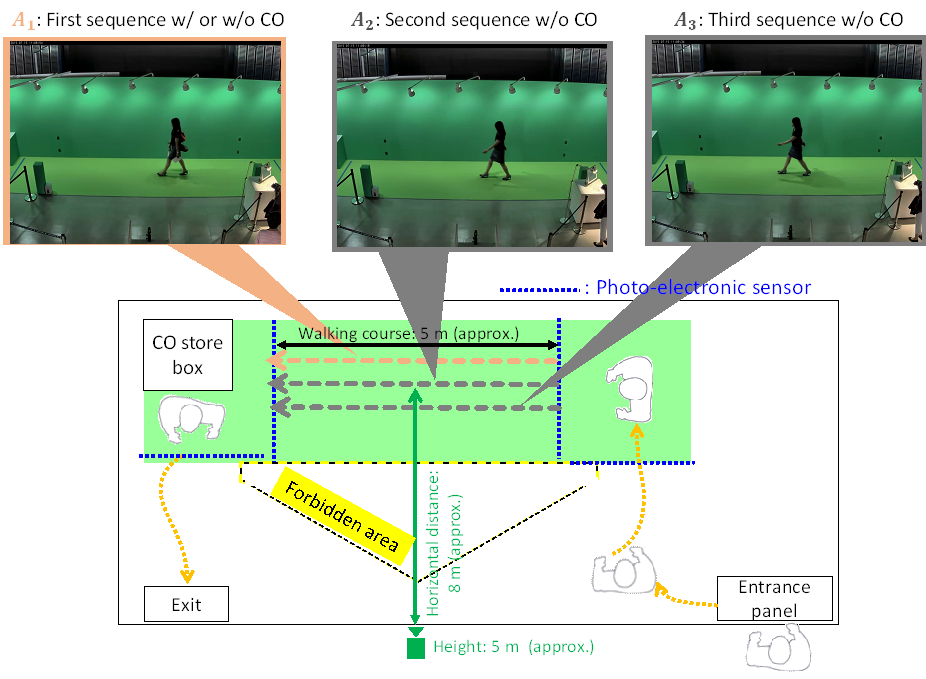

The gait data were collected in conjunction with an experience-based demonstration of video-based gait analysis at a science museum (Miraikan), and informed consent for research use was obtained electronically. The dataset consists of 62,528 subjects, with ages ranging from 2 to 95 years. The camera was set at a distance of approximately 8 m from the straight walking course and at a height of approximately 5 m. The image resolution and frame rate were 1280 x 980 pixels and 25 fps, respectively. Each subject was asked to walk straight three times at his/her preferred speed. The first sequence, captured with COs if the subject had any and without COs otherwise, is called the A1 sequence; the second and third sequences, captured without COs, are called the A2 and A3 sequences, respectively. An overview of the capture system is shown in Fig. 1.

Fig. 1: Illustration of the data collection system.

Annotation of the carrying status

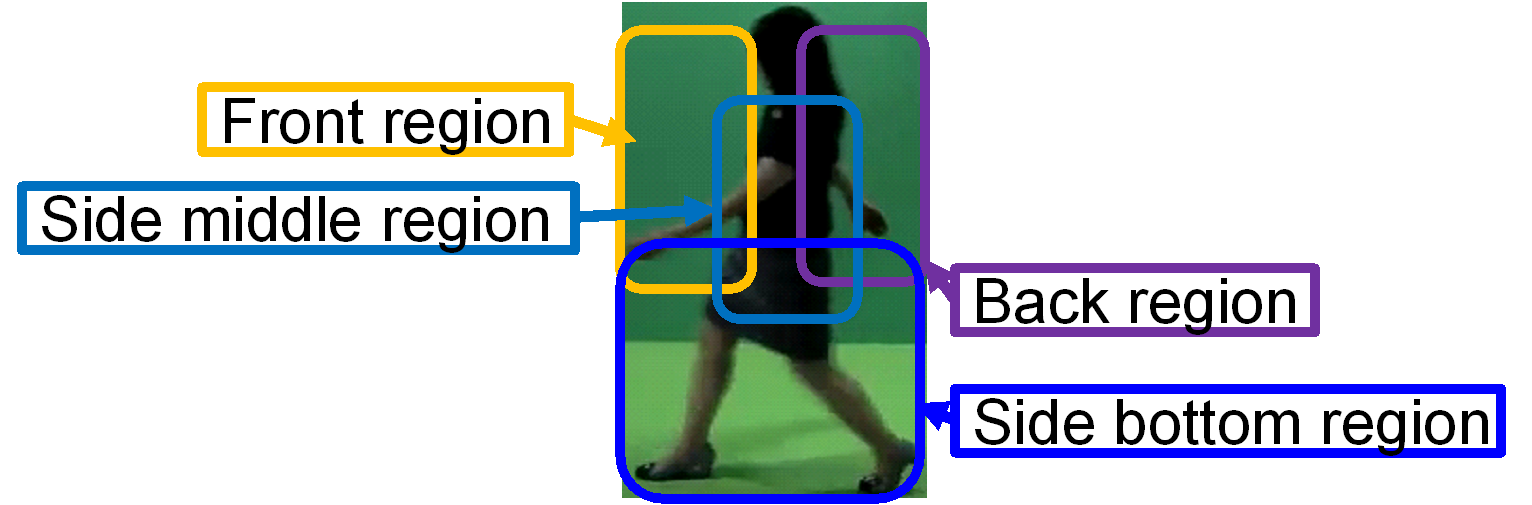

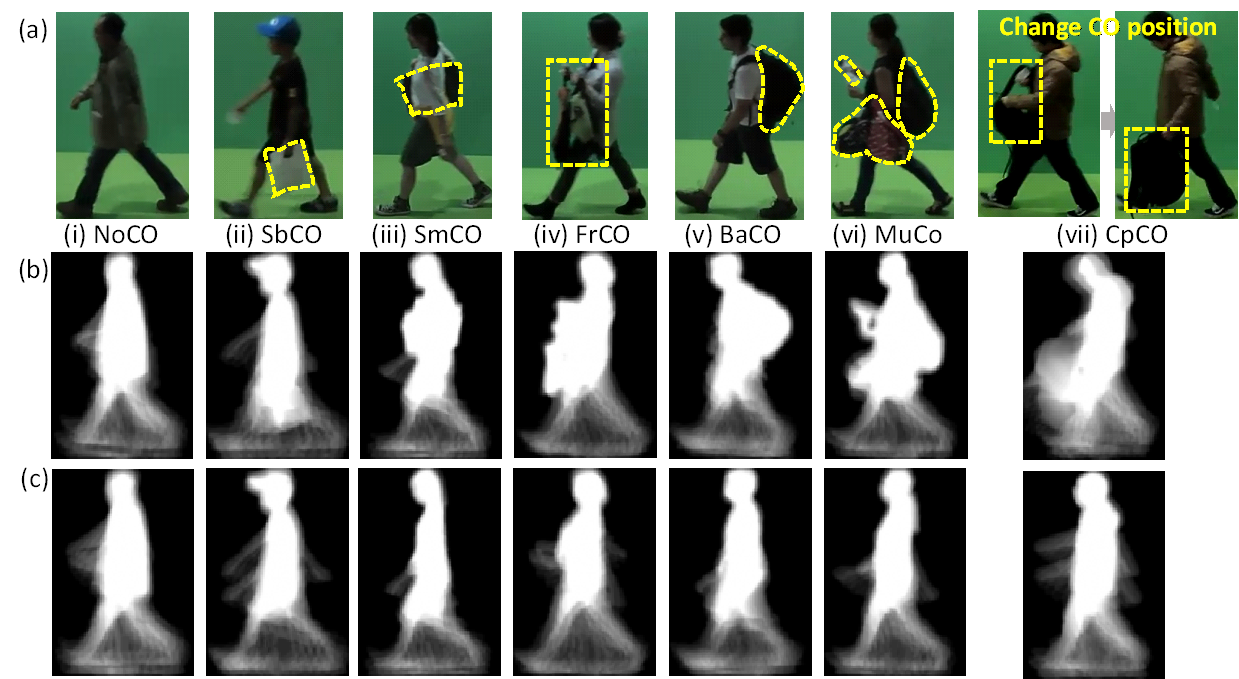

The area in which COs could be carried was manually divided into four regions with respect to the human body: side bottom, side middle, front, and back, as shown in Fig. 2. However, some subjects did not carry a CO, some carried multiple COs in multiple regions, and others changed a CO’s position within a gait period. As a result, a total of seven distinct labels for the carrying status (CS) were annotated in this database. Some examples of CS labels are shown in Fig. 3.

Fig. 2: Four approximate regions of the human body in which a CO can be carried.

Fig. 3: Examples of CS labels: (a) sample RGB image within a gait period with COs (circled in yellow) in their A1 sequence; (b) corresponding GEI feature; (c) GEI feature of the same subject without a CO in another captured sequence (A2 or A3).

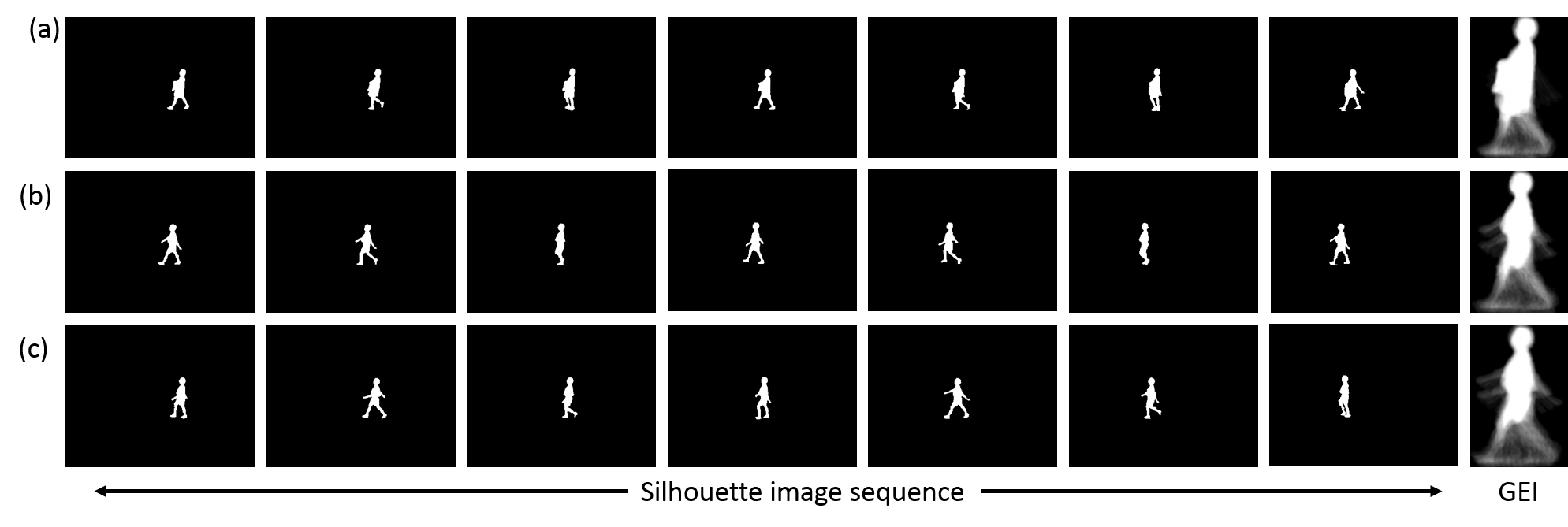

Feature generation

A silhouette image sequence of each subject was extracted using a chroma-key technique, and registration and size normalization of the silhouette images were then performed. A GEI was constructed by averaging the subject’s silhouette image sequence over a centered gait period.
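The averaging step can be sketched as follows. This is a minimal illustration, not the pipeline used to build the database: it assumes the silhouettes of one gait period are already registered and size-normalized to 88x128 (width x height), that any non-zero pixel counts as foreground, and that gait-period detection has been done elsewhere. The function name `compute_gei` is hypothetical.

```python
import numpy as np


def compute_gei(silhouettes):
    """Average a stack of silhouettes from one gait period into a GEI.

    `silhouettes` is an array-like of shape (T, H, W); assumed to be
    already registered and size-normalized (e.g., H=128, W=88).
    Returns a float array of shape (H, W) with values in [0, 1].
    """
    frames = np.asarray(silhouettes)
    if frames.ndim != 3 or frames.shape[0] == 0:
        raise ValueError("expected a non-empty stack of shape (T, H, W)")
    # Assumption: any non-zero pixel is foreground (binary silhouette).
    binary = (frames > 0).astype(np.float64)
    # The GEI is the per-pixel mean over the frames of the gait period.
    return binary.mean(axis=0)


# Usage with synthetic data: 4 frames, foreground patch present in 2 of them.
frames = np.zeros((4, 128, 88), dtype=np.uint8)
frames[:2, 10:20, 5:15] = 255
gei = compute_gei(frames)
```

Each GEI pixel is the fraction of frames in which that pixel was foreground, so static body parts appear bright and moving parts appear in intermediate gray levels.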

How to get the dataset?

To advance the state of the art in gait-based applications, this dataset can be downloaded as a password-protected zip file. It includes a set of size-normalized GEIs, silhouette sequences, a subject ID list with CS labels, and a silhouette frame list (the frames used for generating each GEI). The password will be issued on a case-by-case basis. To receive the password, the requestor must send the release agreement, signed by a legal representative of the requestor's institution (e.g., the supervisor if the requestor is a student), to the database administrator by mail, e-mail, or FAX.

- Release agreement

- Dataset

- GEI_all_subject (GEI/subject/{sequence}.png)

- Silhouette (Silhouette/subject/sequence/{frame}.png)

- Subject_00001-04000

- Subject_04001-08000

- Subject_08001-12000

- Subject_12001-16000

- Subject_16001-20000

- Subject_20001-24000

- Subject_24001-28000

- Subject_28001-32000

- Subject_32001-36000

- Subject_36001-40000

- Subject_40001-44000

- Subject_44001-48000

- Subject_48001-52000

- Subject_52001-56000

- Subject_56001-60000

- Subject_60001-62528

- Subject list (Subject id list with sequence and CS label)

- Silhouette list (Subject id list with frame no. to make GEI)

- Subject list with age and gender (For intersection data set with OULP-Age)

- Training subject list (For intersection data set with OULP-Age)

- Test subject list (For intersection data set with OULP-Age)
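For scripting against the file listing above, the following sketch maps a subject number to the silhouette archive that would contain it and builds relative paths following the listed patterns. It assumes the archives cover contiguous blocks of 4,000 subject numbers up to 62,528, as the archive names suggest. The function names, the subject directory string, and the frame file name used below are hypothetical; the actual naming should be checked against the subject list and silhouette list files.

```python
from pathlib import PurePosixPath

CHUNK_SIZE = 4000      # subjects per silhouette archive, per the listing
MAX_SUBJECT = 62528    # upper bound of the last archive in the listing


def silhouette_archive(subject_number: int) -> str:
    """Return the archive name (e.g., 'Subject_00001-04000') for a subject."""
    if not 1 <= subject_number <= MAX_SUBJECT:
        raise ValueError(f"subject number out of range: {subject_number}")
    start = ((subject_number - 1) // CHUNK_SIZE) * CHUNK_SIZE + 1
    end = min(start + CHUNK_SIZE - 1, MAX_SUBJECT)
    return f"Subject_{start:05d}-{end:05d}"


def gei_path(subject: str, sequence: str) -> str:
    """Relative GEI path following GEI/subject/{sequence}.png."""
    return str(PurePosixPath("GEI") / subject / f"{sequence}.png")


def silhouette_path(subject: str, sequence: str, frame: str) -> str:
    """Relative path following Silhouette/subject/sequence/{frame}.png."""
    return str(PurePosixPath("Silhouette") / subject / sequence / f"{frame}.png")


# Usage: the subject directory name "00001" and frame name "0001" are
# placeholders, not confirmed naming conventions.
archive = silhouette_archive(4001)
a1_gei = gei_path("00001", "A1")
first_frame = silhouette_path("00001", "A1", "0001")
```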

The database administrator

Department of Intelligent Media, The Institute of Scientific and Industrial Research, Osaka University

Address: 8-1 Mihogaoka, Ibaraki, Osaka, 567-0047, JAPAN

FAX: +81-6-6877-4375.