Robotics

From robot navigation to multimedia information acquisition by remote robots

Development of a Networked Robotic System for Disaster Mitigation

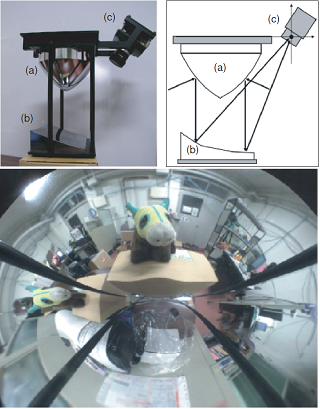

The Horizontal fixed viewpoints Biconical Paraboloidal (HBP) mirror is an anisotropic convex mirror whose angular resolution varies with the azimuth angle. In this paper, we propose a remote surveillance system that uses a specially designed HBP mirror system to collect information about devastated areas. We used a virtual remote surveillance environment to investigate the effectiveness of the HBP mirror system compared with conventional omnidirectional imaging systems. The results of object-search experiments confirmed that objects can be detected early and reliably with the HBP mirror system. We also developed a real remote surveillance system for actual surveillance experiments. Both types of experiments show that the HBP mirror system works effectively for remote surveillance.

Non-isotropic Omnidirectional Imaging System for an Autonomous Mobile Robot

A real-time omnidirectional imaging system, which uses a convex mirror to acquire an omnidirectional field of view at video rate, has been applied under a variety of conditions. The imaging system consists of an isotropic convex mirror and a camera pointing vertically toward the mirror, with the camera's optical axis aligned with the mirror's axis. Because of this optical arrangement, angular resolution is independent of the azimuth angle. However, a mobile robot must find and avoid obstacles in its path, so the view in its direction of travel matters more than its lateral view. We therefore consider that the direction of the robot's motion requires higher angular resolution than the lateral directions. In this paper, we propose a non-isotropic omnidirectional imaging system for navigating a mobile robot.
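For a rotationally symmetric mirror mounted coaxially with the camera, as in the conventional system described above, the azimuth of a scene ray equals the polar angle of its image pixel about the image centre. A circle of radius r pixels therefore spans all 360° with about 2πr/360 pixels per degree, whatever the azimuth. The Python sketch below illustrates this under an idealized coaxial assumption; the function names and the image-centre parameters `cx`, `cy` are hypothetical, and it does not model the proposed non-isotropic mirror.

```python
import math


def pixel_to_azimuth(x: float, y: float, cx: float, cy: float) -> float:
    """Azimuth in degrees, in [0, 360), of the scene ray imaged at pixel (x, y).

    Assumes an ideal rotationally symmetric mirror whose axis passes through
    the image centre (cx, cy); the ray's azimuth then equals the polar angle
    of the pixel about that centre (image coordinates, y pointing down).
    """
    return math.degrees(math.atan2(y - cy, x - cx)) % 360.0


def local_azimuth_resolution(r: float, phi_deg: float, dphi_deg: float = 0.01) -> float:
    """Estimate pixels per degree of azimuth at azimuth phi on an image circle of radius r.

    Measures the pixel distance between the image points of two nearby
    azimuths; for small dphi the chord is practically equal to the arc.
    """
    a0 = math.radians(phi_deg)
    a1 = math.radians(phi_deg + dphi_deg)
    p0 = (r * math.cos(a0), r * math.sin(a0))
    p1 = (r * math.cos(a1), r * math.sin(a1))
    return math.dist(p0, p1) / dphi_deg


# Isotropic case: the estimate is the same at every azimuth,
# about 2*pi*r/360 (roughly 2.618 pixels/degree for r = 150).
for phi in (0.0, 45.0, 90.0, 180.0):
    print(phi, round(local_azimuth_resolution(150.0, phi), 4))
```

A non-isotropic design such as the one proposed here would instead make this per-azimuth resolution larger toward the direction of travel; its actual mirror profile is not described in this section.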

Real Time 3D Environment Modeling for a Mobile Robot by Aligning Range Image Sequences

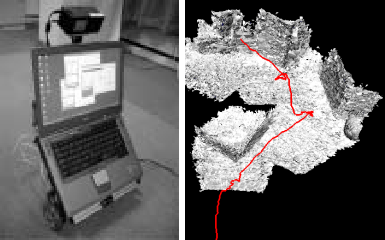

This paper describes real-time 3D modeling of the environment for a mobile robot. A real-time laser range finder mounted on the robot acquires a sequence of range images of the environment while the robot moves around. We detail our method, which achieves simultaneous localization and 3D modeling by aligning the acquired range images. The method incrementally aligns range images in real time using a variant of the iterative closest point (ICP) method. By estimating the uncertainty of the range measurements, we introduce a new weighting scheme into the alignment framework. In experiments, we first evaluate the accuracy of the localization obtained by aligning range images. Second, we show modeling and localization results when the robot moves along a meandering path. Finally, we summarize the conditions on the robot's motion and on the environment that our method requires to work well, along with its limitations.
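The paper's own weighting scheme is not given in this abstract. As a generic illustration of uncertainty-weighted point-to-point ICP, the Python/NumPy sketch below weights each correspondence by the inverse of the summed measurement variances, using a hypothetical noise model (`range_sigma`, with made-up `sigma0` and `k`) in which the standard deviation grows linearly with range. All names and parameters are assumptions, and the brute-force nearest-neighbour search stands in for the faster search a real-time implementation would need.

```python
import numpy as np


def range_sigma(points: np.ndarray, sigma0: float = 0.01, k: float = 0.005) -> np.ndarray:
    """HYPOTHETICAL noise model: per-point std. dev. grows linearly with range
    (distance from the sensor origin of the frame the points are expressed in)."""
    return sigma0 + k * np.linalg.norm(points, axis=1)


def weighted_rigid_fit(src: np.ndarray, dst: np.ndarray, w: np.ndarray):
    """Rotation R and translation t minimising sum_i w_i * |R @ src_i + t - dst_i|^2
    (weighted Kabsch / SVD solution)."""
    w = w / w.sum()
    mu_s = w @ src                                      # weighted centroids
    mu_d = w @ dst
    H = (src - mu_s).T @ ((dst - mu_d) * w[:, None])    # 3x3 weighted cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection (det = -1) in the SVD solution.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = mu_d - R @ mu_s
    return R, t


def weighted_icp(src: np.ndarray, dst: np.ndarray, iters: int = 50, tol: float = 1e-12):
    """Align src (N, 3) to dst (M, 3); returns (R, t) such that R @ src_i + t ~ dst_j."""
    sig_s = range_sigma(src)        # uncertainties in src's own sensor frame
    sig_d = range_sigma(dst)        # uncertainties in the reference frame
    R, t = np.eye(3), np.zeros(3)
    prev_err = np.inf
    for _ in range(iters):
        moved = src @ R.T + t
        # Brute-force nearest neighbours, O(N*M).
        d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nn = d2.argmin(axis=1)
        # Pairs of noisier (longer-range) measurements get smaller weights.
        w = 1.0 / (sig_s ** 2 + sig_d[nn] ** 2)
        # Weighted mean squared residual at the current pose (convergence monitor).
        err = float(w @ d2[np.arange(len(src)), nn] / w.sum())
        R, t = weighted_rigid_fit(src, dst[nn], w)
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return R, t


if __name__ == "__main__":
    # Synthetic check: dst is src moved by a small known rigid motion.
    rng = np.random.default_rng(0)
    dst = rng.uniform(-1.0, 1.0, size=(150, 3))
    a = np.deg2rad(1.5)
    R_true = np.array([[np.cos(a), -np.sin(a), 0.0],
                       [np.sin(a), np.cos(a), 0.0],
                       [0.0, 0.0, 1.0]])
    t_true = np.array([0.02, -0.01, 0.015])
    src = (dst - t_true) @ R_true                       # rows: R_true^T (d_i - t_true)
    R, t = weighted_icp(src, dst)
    print(np.round(t, 6))                               # should be close to t_true
```

Refitting from the original `src` at every iteration keeps the estimate an absolute pose rather than a chain of increments; an incremental system aligning a whole sequence would then compose these poses frame to frame.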
