Pattern Recognition and Image Understanding

Gait-based person identification

Notice: The OU-ISIR Gait Database has been published.

The Optimal Camera Arrangement by a Performance Model for Gait Recognition

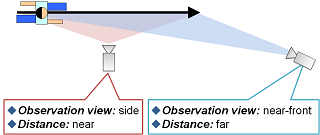

Many gait recognition algorithms have recently been proposed, and an optimal camera arrangement is necessary to maximize their performance. In this paper, we propose a method for determining the optimal camera arrangement using a performance model that comprehensively considers observation conditions. We select silhouette resolution, observation view, and the local and global changes of the view as the observation conditions that affect performance. Then, a training set composed of pairs of observation conditions and performance is obtained through gait recognition experiments under several camera arrangements. A performance model is constructed by applying Gaussian Process Regression to the training set. The optimal arrangement is determined by estimating the performance of each camera arrangement with the performance model. Experiments on performance estimation with a training set of 17 subjects, and on the resulting optimal camera arrangement, demonstrate the effectiveness of the proposed method.
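
The performance-model step can be illustrated with a minimal Gaussian Process Regression sketch. This is not the authors' implementation. It assumes that each camera arrangement has already been summarized as a numeric vector of observation conditions (for example, silhouette resolution and view angle), uses a squared-exponential kernel with fixed, hypothetical hyperparameters (`length_scale`, `noise_var`) instead of fitted ones, and predicts only the posterior mean. The names `GPPerformanceModel` and `best_arrangement` are illustrative.

```python
import math


def rbf_kernel(x1, x2, length_scale=1.0):
    """Squared-exponential kernel between two observation-condition vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x1, x2))
    return math.exp(-0.5 * sq_dist / length_scale ** 2)


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(matrix)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


class GPPerformanceModel:
    """GP regression from observation conditions to recognition performance (mean only)."""

    def __init__(self, conditions, performances, length_scale=1.0, noise_var=1e-4):
        self.conditions = conditions
        self.length_scale = length_scale
        # Constant prior mean: the average training performance.
        self.prior_mean = sum(performances) / len(performances)
        centered = [y - self.prior_mean for y in performances]
        gram = [[rbf_kernel(a, b, length_scale) + (noise_var if i == j else 0.0)
                 for j, b in enumerate(conditions)]
                for i, a in enumerate(conditions)]
        # alpha = (K + noise_var * I)^-1 (y - prior_mean)
        self.alpha = solve(gram, centered)

    def predict(self, condition):
        k_star = [rbf_kernel(condition, c, self.length_scale) for c in self.conditions]
        return self.prior_mean + sum(k * a for k, a in zip(k_star, self.alpha))


def best_arrangement(model, arrangements):
    """arrangements: dict of arrangement name -> observation-condition vector."""
    return max(arrangements, key=lambda name: model.predict(arrangements[name]))
```

In practice the kernel hyperparameters would be fitted to the training set (for example, by maximizing the marginal likelihood), and the predictive variance could flag arrangements that lie far from the training data.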

Gait Analysis of Gender and Age using a Large-scale Multi-view Gait Database

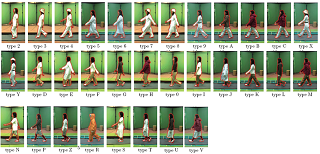

This paper describes video-based gait feature analysis for gender and age classification using a large-scale multi-view gait database. First, we constructed a multi-view gait database that is large in terms of the number of subjects (168 people), the diversity of gender and age (88 males and 80 females aged 4 to 75 years), and the number of observed views (25 views), using a multi-view synchronous gait capturing system. Next, classification experiments with four classes, namely children, adult males, adult females, and the elderly, were conducted to clarify the impact of the view on classification performance. Finally, we analyzed the uniqueness of the gait features of each class for several typical views to gain insight into gait differences among gender and age classes from a computer vision point of view. In addition to insights consistent with previous work, the analysis yielded novel insights into view-dependent gait feature differences among gender and age classes.

Performance Evaluation of Vision-based Gait Recognition using a Very Large-scale Gait Database

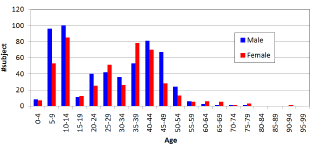

This paper describes the construction of the largest gait database in the world and its application to a statistically reliable performance evaluation of vision-based gait recognition. Whereas existing gait databases include at most on the order of a hundred subjects, we constructed a much larger gait database that includes 1,035 subjects (569 males and 466 females) with ages ranging from 2 to 94 years. Because this very large-scale gait database includes a sufficient number of subjects for each gender and age group, we can analyze the dependence of gait recognition performance on gender and age group. The results of gait recognition based on the Gait Energy Image (GEI) provide several novel insights, such as a tradeoff in gait recognition performance among age groups that derives from the maturity of walking ability and physical strength. Moreover, the improvement in the statistical reliability of the performance evaluation is shown by comparing the gait recognition results for the whole set with those for subsets of a hundred subjects randomly selected from it.
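
For readers unfamiliar with GEI-based recognition, the sketch below shows the basic idea under simplifying assumptions: each silhouette is already size-normalized and registered, the frames cover one gait period, the GEI is the pixel-wise mean of those silhouettes, and matching is a nearest-neighbor search with Euclidean distance. The function names are hypothetical, and the paper's evaluation protocol is not reproduced.

```python
import math


def gait_energy_image(silhouettes):
    """Pixel-wise mean of size-normalized binary silhouettes (rows of 0/1) over one period."""
    n = len(silhouettes)
    height, width = len(silhouettes[0]), len(silhouettes[0][0])
    return [[sum(s[r][c] for s in silhouettes) / n for c in range(width)]
            for r in range(height)]


def gei_distance(gei_a, gei_b):
    """Euclidean distance between two GEIs of the same size."""
    return math.sqrt(sum((a - b) ** 2
                         for row_a, row_b in zip(gei_a, gei_b)
                         for a, b in zip(row_a, row_b)))


def identify(probe_gei, gallery):
    """gallery: dict of subject id -> GEI. Returns (nearest subject id, distance)."""
    best = min(gallery, key=lambda sid: gei_distance(probe_gei, gallery[sid]))
    return best, gei_distance(probe_gei, gallery[best])
```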

Cluster-Pairwise Discriminant Analysis

Pattern recognition problems often suffer from large intra-class variation caused by situation variations, such as variations in pose, walking speed, and clothing in gait recognition. This paper describes a method of discriminant subspace analysis focused on situation cluster pairs. In the training phase, both a situation cluster discriminant subspace and class discriminant subspaces for each situation cluster pair are constructed using training samples of non-recognition-target classes. In the testing phase, given a matching pair of patterns from recognition-target classes, the posteriors of the situation cluster pairs are first estimated, and the distance is then calculated in the corresponding cluster-pairwise class discriminant subspace. Experiments with both simulated and real data show the effectiveness of the proposed method.
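
The testing phase can be sketched as follows. This is a simplified illustration, not the paper's formulation: it assumes the cluster-pair posteriors and the per-pair projection matrices were already estimated in the training phase, and it simply selects the maximum a posteriori cluster pair before measuring the Euclidean distance in that pair's subspace. A posterior-weighted combination over all pairs would be a natural alternative. The cluster labels and matrices in the example are hypothetical.

```python
import math


def project(matrix, vector):
    """Multiply a projection matrix (list of rows) by a feature vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def cluster_pairwise_distance(probe, gallery, posteriors, subspaces):
    """posteriors: dict (probe cluster, gallery cluster) -> posterior probability.
    subspaces: dict with the same keys -> class discriminant projection matrix.
    Returns (distance, selected cluster pair)."""
    # Assumption: pick the maximum a posteriori situation cluster pair.
    pair = max(posteriors, key=posteriors.get)
    w = subspaces[pair]
    diff = [a - b for a, b in zip(project(w, probe), project(w, gallery))]
    return math.sqrt(sum(d * d for d in diff)), pair
```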

Adaptive Acceptance Threshold Control for ROC Curve Optimization

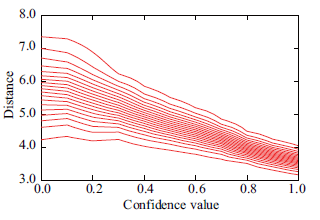

In two-class classification problems such as one-to-one verification and object detection, performance is usually evaluated by a Receiver Operating Characteristic (ROC) curve, which expresses the tradeoff between the False Rejection Rate (FRR) and the False Acceptance Rate (FAR). It is also well known that performance is significantly affected by situation differences between the enrollment and test phases. This paper describes a method that adaptively controls the acceptance threshold using confidence values derived from situation differences so as to optimize the ROC curve. We show that the optimal evolution of the adaptive threshold in the domain of distance and confidence value is equivalent to a constant evolution in the domain of the error gradient, defined as the ratio of the total error rate to the total acceptance rate. An experiment with simulated and real data demonstrates the effectiveness of the proposed method, particularly when the tolerated FAR or FRR is low.
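
The FAR/FRR tradeoff and the idea of a confidence-dependent threshold can be made concrete with a short sketch. It assumes distance-based verification (a pair is accepted when its distance does not exceed the threshold) and attaches a confidence value to each pair. The linear threshold rule used in the checks below is a hypothetical stand-in, not the optimal threshold evolution derived in the paper.

```python
def error_rates(genuine, impostor, threshold_fn):
    """genuine, impostor: lists of (distance, confidence) pairs.
    A pair is accepted when distance <= threshold_fn(confidence).
    Returns (FAR, FRR)."""
    frr = sum(d > threshold_fn(c) for d, c in genuine) / len(genuine)
    far = sum(d <= threshold_fn(c) for d, c in impostor) / len(impostor)
    return far, frr


def roc_curve(genuine, impostor, thresholds):
    """(FAR, FRR) points for a conventional, confidence-independent threshold sweep."""
    return [error_rates(genuine, impostor, lambda c, t=t: t) for t in thresholds]
```

With genuine pairs `[(0.2, 1.0), (0.6, 0.2)]` and impostor pairs `[(0.5, 1.0), (0.9, 0.2)]`, a fixed threshold of 0.4 gives (FAR, FRR) = (0.0, 0.5), while the hypothetical rule `0.4 + 0.3 * (1 - confidence)` relaxes the threshold only for the low-confidence pair and gives (0.0, 0.0).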

Gait Identification for Low Frame-rate Videos

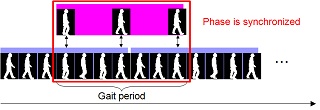

Gait analysis has recently gained attention as a method of identification for wide-area surveillance and criminal investigation. Surveillance camera videos generally have low spatio-temporal resolution because of limited communication bandwidth and storage capacity, which makes it difficult to apply existing gait identification methods to them. We therefore propose period-based gait trajectory matching in eigenspace using phase synchronization. A gait sequence is treated as a trajectory in eigenspace, and phase synchronization is achieved by time stretching and time shifting. In addition, statistical procedures applied to the period-based matching results make the matching robust to fluctuations among gait sequences. Experiments assessing performance at various spatio-temporal resolutions demonstrate the effectiveness of the proposed method.
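
Phase synchronization by time stretching and time shifting can be sketched as follows, assuming each input is one gait period already projected into eigenspace (a list of coordinate vectors). Here time stretching is approximated by cyclic linear interpolation to a common length, and time shifting by an exhaustive search over circular shifts. The paper's exact procedure and its statistical post-processing are not reproduced, and the function names are hypothetical.

```python
import math


def resample_period(traj, length):
    """Time stretching: cyclically interpolate one gait period to `length` samples."""
    n = len(traj)
    out = []
    for i in range(length):
        t = i * n / length
        k = int(t)
        frac = t - k
        a, b = traj[k % n], traj[(k + 1) % n]  # wrap around: the period is cyclic
        out.append([(1.0 - frac) * x + frac * y for x, y in zip(a, b)])
    return out


def synchronized_distance(probe, gallery, length=32):
    """Mean point-wise distance after stretching both periods to `length`
    and choosing the best circular time shift."""
    p = resample_period(probe, length)
    g = resample_period(gallery, length)
    best = math.inf
    for shift in range(length):
        d = sum(math.dist(p[i], g[(i + shift) % length]) for i in range(length)) / length
        best = min(best, d)
    return best
```

Because both periods are stretched to the same length before matching, a probe period captured with fewer frames (a low frame rate) can still be compared with a gallery period captured with more frames.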

Online Gait Measurement for Digital Entertainment

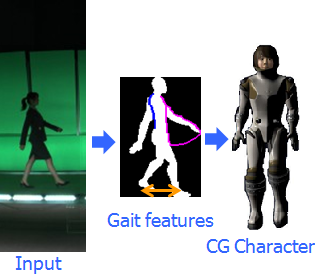

This paper presents a method for measuring gait features online from gait silhouette images and reflecting them in CG characters for audience-participation digital entertainment. First, both static and dynamic gait features are extracted from the silhouette images captured by the online gait measurement system, which uses two cameras and a chroma-key background. Then, Standard Gait Models (SGMs) with various types of gait features are constructed and stored; each SGM is composed of a pair of CG character rendering parameters and synthesized silhouette images. Finally, the blend ratios of the SGMs are estimated to minimize the gait feature errors between the blended model and the online measurement. In an experiment, a gait database with 100 subjects is used for gait feature analysis, and it is confirmed that the measured gait features are effectively reflected in the CG characters.
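
Blend-ratio estimation can be illustrated in its simplest form, with only two SGMs whose ratios sum to one. The method itself blends several SGMs, so this two-model restriction is an assumption made for brevity. Under it, minimizing the squared gait-feature error has a closed-form solution, w = ⟨m − b, a − b⟩ / ‖a − b‖², which is then clipped to [0, 1]. The feature vectors are hypothetical placeholders for measured static and dynamic gait features.

```python
def blend_ratio(feat_a, feat_b, measured):
    """Weight w in [0, 1] minimizing ||w * feat_a + (1 - w) * feat_b - measured||^2."""
    diff = [a - b for a, b in zip(feat_a, feat_b)]
    denom = sum(d * d for d in diff)
    if denom == 0.0:
        return 0.5  # identical models: every ratio gives the same blend
    w = sum((m - b) * d for m, b, d in zip(measured, feat_b, diff)) / denom
    # The objective is a 1-D convex quadratic, so clipping gives the constrained optimum.
    return min(1.0, max(0.0, w))


def blend(feat_a, feat_b, w):
    """Gait features of the blended model."""
    return [w * a + (1.0 - w) * b for a, b in zip(feat_a, feat_b)]
```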

Clothing-invariant Gait Identification

Variations in clothing alter an individual's appearance, making the problem of gait identification much more difficult. If the type of clothing differs between a gallery and a probe, certain parts of the silhouettes are likely to change, and the ability to discriminate subjects decreases with respect to these parts. A part-based approach therefore has the potential to select the appropriate parts. This paper proposes a method for part-based gait identification in light of substantial clothing variations. We divide the human body into 8 sections, including 4 overlapping ones, because larger parts have higher discrimination capability, while smaller parts are more likely to be unaffected by clothing variations. Furthermore, because some clothes are common to different parts, we present a categorization of clothing items that groups similar clothes. Next, we exploit the discrimination capability as a matching weight for each part and control the weights adaptively based on the distribution of distances between the probe and all the galleries. The results of experiments using our large-scale gait dataset with clothing variations show that the proposed method achieves far better performance than other approaches.
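
Part-based matching with per-part weights can be sketched as a weighted average of per-part distances. This sketch assumes fixed, hypothetical weights and Euclidean distance on per-part feature vectors. The adaptive weight control based on the probe-to-gallery distance distribution is not reproduced.

```python
import math


def part_weighted_distance(probe_parts, gallery_parts, weights):
    """probe_parts, gallery_parts: dict part name -> feature vector.
    weights: dict part name -> non-negative matching weight (not all zero)."""
    total = sum(weights.values())
    return sum(w * math.dist(probe_parts[p], gallery_parts[p])
               for p, w in weights.items()) / total


def identify(probe_parts, gallery, weights):
    """gallery: dict subject id -> part features. Returns the nearest subject id."""
    return min(gallery, key=lambda sid: part_weighted_distance(probe_parts, gallery[sid], weights))
```

For example, suppose a probe's torso differs from subject A's gallery entry only because of a coat. With equal weights, a different subject whose torso happens to match can be ranked first, but setting the torso weight to zero makes A the nearest subject again.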

Speed-invariant Gait Identification

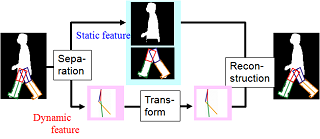

We propose a method of transforming gait silhouettes from one walking speed to another to cope with walking speed changes in gait identification. First, gait silhouettes are divided into static and dynamic features using a human model. Second, a speed transformation model is created using a training set of dynamic features for multiple persons at multiple speeds. This model can transform dynamic features from a reference speed to any other speed. Finally, silhouettes are restored by combining the divided static features and the transformed dynamic features. An evaluation by gait identification using frequency-domain features shows the effectiveness of the proposed method.

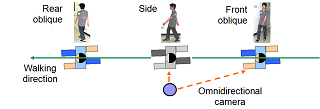

Multi-view Gait Identification using an Omnidirectional Camera

We propose a method of gait identification based on multi-view gait images captured with an omnidirectional camera. We first transform omnidirectional silhouette images into panoramic ones and obtain a spatio-temporal gait silhouette volume (GSV). Next, we extract frequency-domain features by Fourier analysis based on gait periods estimated by autocorrelation of the GSVs. Because the omnidirectional camera observes the walking person from changing views, multi-view features can be extracted from the parts of the GSV corresponding to basis views. In the identification phase, the distance between probe and gallery features of the same view is calculated, and the distances for all views are then integrated for matching. Gait identification experiments with 21 subjects from 5 views demonstrate the effectiveness of the proposed method.
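
Gait period estimation by autocorrelation can be sketched on a one-dimensional signal, for example a hypothetical per-frame foreground pixel count taken from the GSV (the paper computes the autocorrelation of the GSVs themselves). The sketch returns the smallest lag that is a local maximum of the normalized autocorrelation and lies within `tol` of the global maximum, so that a multiple of the period is not chosen by accident. `min_lag`, `max_lag`, and `tol` are assumed parameters.

```python
import math


def normalized_autocorr(x, lag):
    """Normalized correlation between x[:-lag] and x[lag:]."""
    n = len(x)
    num = sum(x[i] * x[i + lag] for i in range(n - lag))
    den = math.sqrt(sum(v * v for v in x[: n - lag]) * sum(v * v for v in x[lag:]))
    return num / den if den > 0 else 0.0


def estimate_period(signal, min_lag=2, max_lag=None, tol=0.05):
    """Gait period in frames: the first near-maximal local peak of the autocorrelation."""
    n = len(signal)
    max_lag = max_lag if max_lag is not None else n // 2
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC component
    r = {lag: normalized_autocorr(x, lag) for lag in range(min_lag, max_lag + 1)}
    best = max(r.values())
    for lag in range(min_lag, max_lag + 1):
        left = r.get(lag - 1, -math.inf)
        right = r.get(lag + 1, -math.inf)
        if r[lag] >= left and r[lag] >= right and r[lag] >= best - tol:
            return lag
    return max(r, key=r.get)
```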

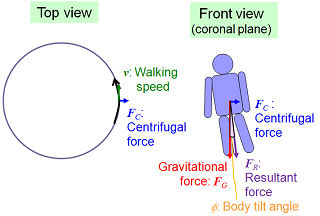

Gait Identification Considering Body Tilt by Walking Direction Changes

Gait identification has recently gained attention as a method of identifying individuals at a distance. Though most previous works treated only straight-walk sequences for simplicity, curved-walk sequences should also be treated, considering situations where a person walks along a curved path or enters a building from a sidewalk. In such cases, a person's body sometimes tilts because of centrifugal force when the walking direction changes, and this body tilt considerably degrades the gait silhouette and identification performance, especially for widely used appearance-based approaches. Therefore, we propose a method of body-tilted silhouette correction based on centrifugal force estimation from walking trajectories. The silhouette correction is followed by a gait identification process that includes frequency-domain gait feature extraction and learning of a View Transformation Model (VTM). Gait identification experiments on circular-walk sequences demonstrate the effectiveness of the proposed method.
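
The link between walking trajectory and body tilt can be sketched with elementary mechanics. Assume a walker leans so that the resultant of gravity and the centripetal acceleration passes through the feet. Then the lean angle from vertical is θ = arctan(v² / (g r)), where v is the walking speed and r is the local radius of curvature. The sketch estimates v and r from three consecutive ground positions. This is an illustrative model; the paper's estimation procedure may differ, and the lean direction (toward the center of the turn) is not computed here.

```python
import math

G = 9.81  # gravitational acceleration [m/s^2]


def circumradius(p1, p2, p3):
    """Radius of the circle through three 2-D points (inf for collinear points)."""
    a, b, c = math.dist(p2, p3), math.dist(p1, p3), math.dist(p1, p2)
    twice_area = abs((p2[0] - p1[0]) * (p3[1] - p1[1]) - (p2[1] - p1[1]) * (p3[0] - p1[0]))
    if twice_area == 0.0:
        return math.inf
    return a * b * c / (2.0 * twice_area)  # R = abc / (4 * area)


def tilt_angles(trajectory, dt):
    """trajectory: list of (x, y) ground positions [m] sampled every dt seconds.
    Returns the estimated lean angle [rad] at each interior sample."""
    angles = []
    for p0, p1, p2 in zip(trajectory, trajectory[1:], trajectory[2:]):
        speed = math.dist(p0, p2) / (2.0 * dt)  # central-difference speed estimate
        radius = circumradius(p0, p1, p2)
        lateral_acc = speed ** 2 / radius  # centripetal acceleration v^2 / r
        angles.append(math.atan2(lateral_acc, G))
    return angles
```

A corrected silhouette could then be obtained by rotating each frame by the negative of the estimated angle about the feet, although that step is not shown.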

Gait Identification using a View Transformation Model in the Frequency Domain

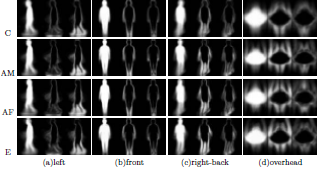

Gait analysis has recently gained attention as a method of identifying individuals at a distance from a camera. However, appearance changes caused by changes in view direction make gait identification difficult. We propose a method of gait identification using frequency-domain features and a view transformation model. We first extract frequency-domain features from a spatio-temporal gait silhouette volume. Next, our view transformation model is obtained from a training set of multiple persons observed from multiple view directions. In the identification phase, the model transforms gallery features into the same view direction as that of an input feature.
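
Frequency-domain feature extraction from a gait silhouette volume can be sketched as a per-pixel discrete Fourier transform along the time axis over one gait period, keeping the amplitude spectra at a few low harmonics of the gait frequency. The choice of harmonics 0, 1, and 2, the normalization by period length, and the function names are assumptions for illustration. The view transformation model itself is not shown.

```python
import math


def dft_amplitude(series, k):
    """|X(k)| of the DFT of one period of a pixel's time series, divided by its length."""
    n = len(series)
    re = sum(v * math.cos(2 * math.pi * k * t / n) for t, v in enumerate(series))
    im = -sum(v * math.sin(2 * math.pi * k * t / n) for t, v in enumerate(series))
    return math.hypot(re, im) / n


def frequency_features(volume, harmonics=(0, 1, 2)):
    """volume: list of frames (each a list of rows) covering exactly one gait period.
    Returns a dict mapping each harmonic k to an amplitude image |X_k(y, x)|."""
    height, width = len(volume[0]), len(volume[0][0])
    return {k: [[dft_amplitude([frame[r][c] for frame in volume], k) for c in range(width)]
                for r in range(height)]
            for k in harmonics}
```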

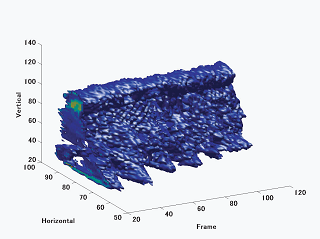

Spatio-Temporal Analysis of Walking

This paper describes a new approach for identifying a person from a spatio-temporal volume that consists of sequential images of the person walking in an arbitrary direction. The proposed approach employs an omnidirectional image sensor and analyzes the three-dimensional frequency properties of the spatio-temporal volume, because the sensor can capture a long image sequence of a person's movements in all directions, and walking patterns are cyclic. The spatio-temporal volume data, here called the "gait volume", contain information not only on spatial individualities, such as features of the torso and face, but also on torso movements with a unique rhythm. A three-dimensional Fourier transform is applied to the gait volume to obtain a unique frequency for each person's walking pattern. This paper also evaluates the applicability of three-dimensional frequency analysis of the gait volume by examining how much the frequency patterns differ during walking.
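
The three-dimensional Fourier analysis of a gait volume can be illustrated with a naive 3D DFT, which is practical only for tiny volumes (a real implementation would use an FFT library). The checks below confirm two standard DFT properties that motivate amplitude-based features. First, the zero-frequency coefficient equals the sum of the volume. Second, a circular shift along the time axis changes only the phase, so the magnitude spectrum is unchanged. The function names are hypothetical.

```python
import cmath


def dft3(volume):
    """Naive 3D DFT of volume[t][y][x]; returns complex coefficients F[u][v][w]."""
    T, H, W = len(volume), len(volume[0]), len(volume[0][0])
    out = [[[0j] * W for _ in range(H)] for _ in range(T)]
    for u in range(T):
        for v in range(H):
            for w in range(W):
                s = 0j
                for t in range(T):
                    for y in range(H):
                        for x in range(W):
                            phase = -2j * cmath.pi * (u * t / T + v * y / H + w * x / W)
                            s += volume[t][y][x] * cmath.exp(phase)
                out[u][v][w] = s
    return out


def magnitude_spectrum(volume):
    """|F[u][v][w]|: invariant to circular shifts of the volume along any axis."""
    return [[[abs(c) for c in row] for row in plane] for plane in dft3(volume)]
```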
