Geometry

3D reconstruction and camera calibration

| Dense 3D reconstruction method using a single pattern for fast moving object |

|

|

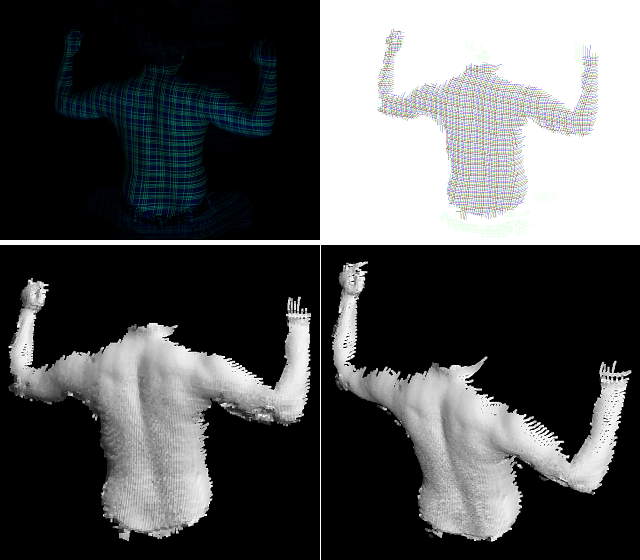

Dense 3D reconstruction of extremely fast-moving objects could contribute to applications such as body structure analysis and accident avoidance. The scanning scenarios we have in mind include acquiring the sequence of shapes at the moment an object explodes, or observing the blades of a fast-rotating turbine. In this paper, we propose such a technique based on a one-shot scanning method that reconstructs 3D shape from a single image in which a dense, simple pattern is projected onto the object. Dense 3D reconstruction from a single image raises several issues, e.g. instability caused by using multiple colors, and difficulty in detecting a dense pattern because of the influence of object color and texture compression. We address these issues by combining two methods: (1) an efficient line detection technique based on a de Bruijn sequence and belief propagation, and (2) an extension of the shape-from-intersections-of-lines method. Using a high-speed camera, we built a scanning system that can capture an object in fast motion. In the experiments, the proposed method successfully captured sequences of dense shapes of an exploding balloon and a breaking ceramic dish at 300-1000 fps. |
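
The abstract does not state the alphabet or window length of the projected pattern, so the following is only a minimal sketch of why a de Bruijn sequence helps line identification: every window of `n` consecutive symbols occurs exactly once, so a detected line can be indexed from its local neighborhood. The function names and the choice of `k` and `n` are illustrative assumptions, not the authors' parameters, and the belief propagation step is not shown.

```python
def de_bruijn(k: int, n: int) -> list[int]:
    """Return a cyclic de Bruijn sequence B(k, n) over symbols 0..k-1."""
    a = [0] * (k * n)
    sequence: list[int] = []

    def db(t: int, p: int) -> None:
        # Standard recursive construction that concatenates Lyndon words.
        if t > n:
            if n % p == 0:
                sequence.extend(a[1:p + 1])
        else:
            a[t] = a[t - p]
            db(t + 1, p)
            for j in range(a[t - p] + 1, k):
                a[t] = j
                db(t + 1, t)

    db(1, 1)
    return sequence


def window_lookup(seq: list[int], n: int) -> dict[tuple[int, ...], int]:
    """Map each cyclic window of n symbols to its start position."""
    m = len(seq)
    return {tuple(seq[(i + j) % m] for j in range(n)): i for i in range(m)}


# Hypothetical example: 3 line codes, windows of 3 adjacent lines.
codes = de_bruijn(3, 3)
lookup = window_lookup(codes, 3)
observed = tuple(codes[10:13])      # three neighboring lines seen in the image
position = lookup[observed]         # index of the first line in the pattern
```

Because each window is unique, the lookup table has exactly `k**n` entries, which is what lets a local observation determine a global line index.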

|

|

| Simultaneously Capturing Texture and Shape by Projecting Structured Infrared Light |

|

|

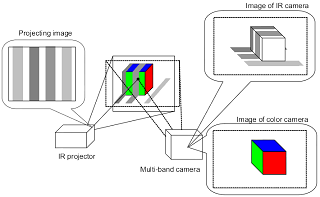

Simultaneous capture of the texture and shape of a moving object in real time is expected to be applicable to various fields, including virtual reality and object recognition. Two difficulties must be overcome to develop a sensor with this capability: capturing shape quickly, and capturing texture and shape simultaneously. One-shot capturing methods based on projecting colored structured light have already been proposed to obtain shape at a high frame rate. However, since these methods use visible light, they cannot capture texture and shape simultaneously. In this paper, we propose a method that uses projected infrared structured light. Since the proposed method uses visible light for texture and infrared light for shape, simultaneous capturing can be achieved. In addition, we developed a system that maps texture onto the captured shape without occlusion by placing the cameras for visible and infrared light coaxially. |

|

|

| Registration of Deformable Objects |

|

|

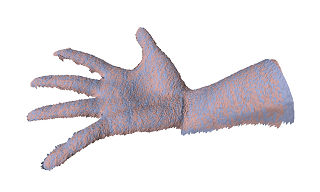

This paper describes a method for registering deformable 3D objects. When an object such as a hand deforms, the deformation of the local shape is small, whereas the global shape deforms to a greater extent in many cases. Therefore, the local shape can be used as a feature for matching corresponding points. Instead of using a descriptor of the local shape, we introduce the convolution of the error between corresponding points for each vertex of a 3D mesh model. This approach is analogous to window matching in 2D image registration. Since the proposed method computes the convolution for every vertex in a model, it incorporates dense feature matching as opposed to sparse matching based on certain feature descriptors. Through experiments, we show that the convolution is useful for finding corresponding points and evaluate the accuracy of the registration. |

|

|

| A Probabilistic Method for Aligning and Merging Range Images with Anisotropic Error Distribution |

|

|

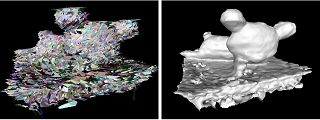

This paper describes a probabilistic method of aligning and merging range images. We formulate these issues as problems of estimating the maximum likelihood. By examining the error distribution of a range finder, we model it as a normal distribution along the line of sight. To align range images, our method estimates the parameters based on the Expectation Maximization (EM) approach. By assuming the error model, the algorithm is implemented as an extension of the Iterative Closest Point (ICP) method. For merging range images, our method computes the signed distances by finding the distances of maximum likelihood. Since our proposed method uses multiple correspondences for each vertex of the range images, errors after aligning and merging range images are less than those of earlier methods that use one-to-one correspondences. Finally, we tested and validated the efficiency of our method by simulation and on real range images. |
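
As a rough illustration of the error model and of the "multiple correspondences" idea, the sketch below computes soft correspondence weights (an E-step) for one vertex, using a Gaussian that is wide along the line of sight and narrow across it. The small across-sight variance, the sigma values, and all names are assumptions made here so the example is well defined; the abstract only states that the error is modeled as normal along the line of sight.

```python
import math


def mahalanobis_sq(residual, sight, sigma_along, sigma_across):
    """Squared Mahalanobis length of a residual under a sight-aligned Gaussian."""
    norm = math.sqrt(sum(c * c for c in sight))
    unit = [c / norm for c in sight]
    # Split the residual into components along and across the line of sight.
    along = sum(r * u for r, u in zip(residual, unit))
    across_sq = max(sum(r * r for r in residual) - along * along, 0.0)
    return (along / sigma_along) ** 2 + across_sq / sigma_across ** 2


def soft_weights(point, candidates, sight, sigma_along=5.0, sigma_across=0.5):
    """Posterior weight of each candidate correspondence (sums to 1)."""
    likelihoods = []
    for cand in candidates:
        residual = [c - p for c, p in zip(cand, point)]
        d2 = mahalanobis_sq(residual, sight, sigma_along, sigma_across)
        likelihoods.append(math.exp(-0.5 * d2))
    total = sum(likelihoods)
    if total == 0.0:  # all candidates are far away: fall back to uniform weights
        return [1.0 / len(candidates)] * len(candidates)
    return [v / total for v in likelihoods]


# A candidate displaced along the line of sight is far more plausible than
# one displaced sideways by the same Euclidean distance.
weights = soft_weights((0, 0, 0), [(0, 0, 2), (2, 0, 0)], sight=(0, 0, 1))
```

An isotropic one-to-one ICP would treat both candidates in the example as equally good matches; the anisotropic weights are what distinguish them.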

|

|

| Real Time 3D Environment Modeling for a Mobile Robot by Aligning Range Image Sequences |

|

|

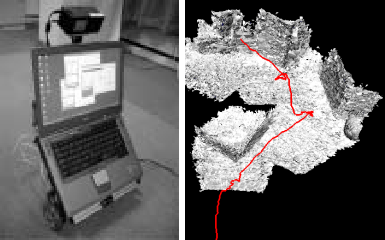

This paper describes real-time 3D modeling of the environment for a mobile robot. A real-time laser range finder is mounted on the robot and obtains a range image sequence of the environment while the robot moves around. We detail a method that accomplishes simultaneous localization and 3D modeling by aligning the acquired range images. The method incrementally aligns range images in real time using a variant of the iterative closest point (ICP) method. By estimating the uncertainty of range measurements, we introduce a new weighting scheme into the alignment framework. In an experiment, we first evaluate the accuracy of the localization results obtained by aligning range images. Second, we show the results of modeling and localization when a robot moves along a meandering path. Finally, we summarize the conditions and limitations required of the robot's motion and the environment for our method to work well. |
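
The weighting scheme itself is not described in the abstract. The sketch below shows one ICP iteration with per-point weights, assuming inverse-variance weights `1 / sigma**2` and working in 2D so the rigid fit has a closed form without a linear algebra library. Both choices, and all function names, are assumptions for illustration rather than the method in the paper.

```python
import math


def nearest(p, points):
    """Closest point to p by brute force (a real system would use a k-d tree)."""
    return min(points, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)


def weighted_rigid_fit(src, dst, weights):
    """Rotation angle and translation minimizing sum w_i |R p_i + t - q_i|^2."""
    w_sum = sum(weights)
    sx = sum(w * p[0] for w, p in zip(weights, src)) / w_sum
    sy = sum(w * p[1] for w, p in zip(weights, src)) / w_sum
    dx = sum(w * q[0] for w, q in zip(weights, dst)) / w_sum
    dy = sum(w * q[1] for w, q in zip(weights, dst)) / w_sum
    cross = dot = 0.0
    for w, p, q in zip(weights, src, dst):
        px, py, qx, qy = p[0] - sx, p[1] - sy, q[0] - dx, q[1] - dy
        cross += w * (px * qy - py * qx)
        dot += w * (px * qx + py * qy)
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    return theta, (dx - (c * sx - s * sy), dy - (s * sx + c * sy))


def icp_step(src, dst, sigmas):
    """One iteration: match by nearest neighbor, then solve the weighted fit."""
    matches = [nearest(p, dst) for p in src]
    weights = [1.0 / (s * s) for s in sigmas]  # assumed inverse-variance weights
    return weighted_rigid_fit(src, matches, weights)
```

In an incremental setting this step would be repeated until the estimated motion stops changing, with each new range image aligned against the model built so far.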

|

|

| Narrow-baseline Stereo |

|

|

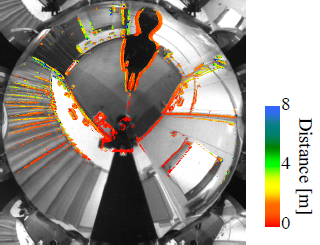

We propose a disparity detection filter, a novel method for detecting disparity in a narrow-baseline system. A previously proposed method for detecting near objects has low computational cost, but it can only determine whether or not an object is close. The disparity detection filter can be applied to our omnidirectional sensor, and it detects the disparity of objects through hierarchically smoothed images with various window sizes. The method does not need to search for correspondences along epipolar lines, and its computational cost is low because the disparity in each hierarchically smoothed image is detected from the intensity gradient. In addition, the method is well suited to detecting small disparities. Applying the method, we can detect disparity at high speed with narrow-baseline stereo cameras and our omnidirectional sensor.
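
The exact form of the filter is not given here. As a hedged sketch of how disparity can be read from the intensity gradient without a correspondence search, the code below uses the standard first-order approximation `right(x) ≈ left(x) + d * left'(x)` on a 1D scanline and solves for `d` by least squares, optionally after box smoothing. The box filter, the sign convention, and the function names are assumptions for illustration.

```python
import math


def box_smooth(signal, radius):
    """Moving average with the window truncated at the borders."""
    n = len(signal)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out


def gradient_disparity(left, right):
    """Least-squares disparity d from right - left ≈ d * d(left)/dx."""
    num = den = 0.0
    for i in range(1, len(left) - 1):
        g = (left[i + 1] - left[i - 1]) / 2.0  # central-difference gradient
        num += g * (right[i] - left[i])
        den += g * g
    return num / den if den > 0.0 else 0.0


# Synthetic scanlines: the right view is the left view shifted by 0.3 pixels.
left = [math.sin(0.2 * x) for x in range(100)]
right = [math.sin(0.2 * (x + 0.3)) for x in range(100)]
d_raw = gradient_disparity(left, right)
d_smooth = gradient_disparity(box_smooth(left, 2), box_smooth(right, 2))
```

The linearization only holds when the shift is small relative to the scale of the image structure, which is consistent with the remark that the method suits small disparities; stronger smoothing extends the range of shifts for which it remains valid.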

| Robust and Real-Time Egomotion Estimation Using a Compound Omnidirectional Sensor |

|

|

We propose a new egomotion estimation algorithm for a compound omnidirectional camera. Image features are detected by a conventional feature detector and then quickly classified into near and far features by checking infinity on the omnidirectional image of the compound omnidirectional sensor. Egomotion estimation is performed in two steps: first, rotation is recovered using far features; then translation is estimated from near features using the estimated rotation. RANSAC is used for estimations of both rotation and translation. Experiments in various environments show that our approach is robust and provides good accuracy in real-time for large motions. |
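
To make the first of the two steps concrete, here is a minimal planar sketch of recovering rotation from far features with RANSAC. It assumes that far features are unaffected by translation, so their bearings all shift by the same angle between frames, and that each hypothesis comes from a single feature. The planar simplification, the tolerance, and the function names are assumptions; the translation step from near features is not shown.

```python
import math
import random


def wrap(angle):
    """Wrap an angle to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def ransac_rotation(bearings_prev, bearings_curr, tol=0.01, iters=50, seed=0):
    """Consensus bearing shift of far features and the number of inliers."""
    rng = random.Random(seed)
    shifts = [wrap(c - p) for p, c in zip(bearings_prev, bearings_curr)]
    best = []
    for _ in range(iters):
        hypothesis = rng.choice(shifts)  # one feature proposes a rotation
        inliers = [s for s in shifts if abs(wrap(s - hypothesis)) < tol]
        if len(inliers) > len(best):
            best = inliers
    # Refine with the circular mean of the inlier shifts.
    mean = math.atan2(sum(math.sin(s) for s in best),
                      sum(math.cos(s) for s in best))
    return mean, len(best)


# Ten far features; two are mismatched and act as outliers.
prev = [0.1 * i for i in range(10)]
curr = [p + 0.2 for p in prev]
curr[3] += 1.0
curr[7] -= 0.7
shift, n_inliers = ransac_rotation(prev, curr)
```

With the rotation fixed, the remaining image motion of near features depends only on translation, which is why the second step can be solved separately.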

|

|

| Accurate Calibration of Intrinsic Camera Parameters by Observing Parallel Light Pairs |

|

|

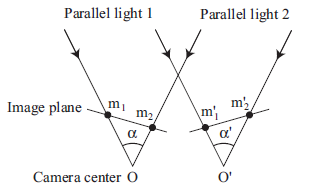

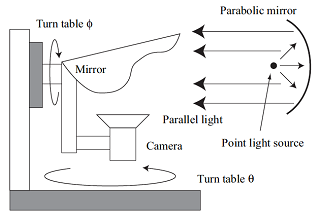

This study describes a method of estimating the intrinsic parameters of a perspective camera. In previous calibration methods for perspective cameras, the intrinsic and extrinsic parameters are estimated simultaneously. Thus, the estimated intrinsic parameters depend on the estimation of the extrinsic parameters, which is inconsistent with the fact that intrinsic parameters are independent of extrinsic ones. Moreover, in situations where the extrinsic parameters are not used, only the intrinsic parameters need to be estimated. In this case, an intrinsic parameter such as focal length is not sufficiently robust against image processing noise, because that noise is absorbed by both parameter types during calibration. We therefore propose a new method that allows the estimation of intrinsic parameters without estimating the extrinsic parameters. To calibrate the intrinsic parameters, the proposed method observes parallel light pairs that are projected onto different points. This is accomplished by applying the constraint that the relative angle of two parallel rays is constant irrespective of where the rays are projected. The method focuses only on intrinsic parameters, and the calibration is sufficiently robust, as demonstrated in this study. Moreover, our method can visualize the error of the calibrated result and the degeneracy of the input data. |
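
The constraint can be illustrated with a deliberately reduced model: a pinhole camera with a known principal point and no distortion, where only the focal length is unknown. For the correct focal length, the angle between the two back-projected rays is the same for every observation, so the sketch below picks the candidate that minimizes the spread of that angle. The reduced model, the grid search, and the function names are assumptions for illustration and do not reproduce the estimation used in the paper.

```python
import math


def ray(pixel, f, c):
    """Back-projected ray of a pixel for focal length f and principal point c."""
    return ((pixel[0] - c[0]) / f, (pixel[1] - c[1]) / f, 1.0)


def angle_between(p1, p2, f, c):
    a, b = ray(p1, f, c), ray(p2, f, c)
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return math.acos(max(-1.0, min(1.0, dot / (na * nb))))


def angle_spread(pairs, f, c):
    """Variance of the relative angle over all observed light pairs."""
    angles = [angle_between(p1, p2, f, c) for p1, p2 in pairs]
    mean = sum(angles) / len(angles)
    return sum((a - mean) ** 2 for a in angles) / len(angles)


def calibrate_focal(pairs, c, candidates):
    """Candidate focal length for which the relative angle is most constant."""
    return min(candidates, key=lambda f: angle_spread(pairs, f, c))


# Synthetic observations: two light directions 0.1 rad apart, seen at
# different image positions as the camera is rotated (no pose is needed).
f_true, center, alpha = 800.0, (320.0, 240.0), 0.1
pairs = [((center[0] + f_true * math.tan(phi), center[1]),
          (center[0] + f_true * math.tan(phi + alpha), center[1]))
         for phi in (-0.3, -0.1, 0.0, 0.2)]
best_f = calibrate_focal(pairs, center, [600.0 + 10.0 * i for i in range(41)])
```

Note that the camera pose never appears in the cost, which mirrors the claim that the intrinsic parameters are estimated without estimating the extrinsic ones.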

|

|

| Mirror Localization for Catadioptric Imaging System by Observing Parallel Light Pairs |

|

|

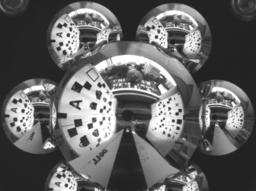

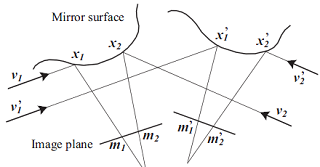

This paper describes a method of mirror localization to calibrate a catadioptric imaging system. While the calibration of a catadioptric system includes the estimation of various parameters, we focus on the localization of the mirror. The proposed method estimates the position of the mirror by observing pairs of parallel lights, which are projected from various directions. Although some earlier methods for calibrating catadioptric systems assume that the system is single viewpoint, which is a strong restriction on the position and shape of the mirror, our method does not restrict the position and shape of the mirror. Since the constraint used by the proposed method is that the relative angle of two parallel lights is constant with respect to the rigid transformation of the imaging system, we can omit both the translation and rotation between the camera and calibration objects from the parameters to be estimated. Therefore, the estimation of the mirror position by the proposed method is independent of the extrinsic parameters of a camera. We compute the error between the model of the mirror and the measurements, and then estimate the position of the mirror by minimizing this error. We test our method using both simulation and real experiments, and evaluate the accuracy thereof. |

|

|

| Mirror Localization for a Catadioptric Imaging System by Projecting Parallel Lights |

|

|

This paper describes a method of mirror localization to calibrate a catadioptric imaging system. Even though the calibration of a catadioptric system includes the estimation of various parameters, in this paper we focus on the localization of the mirror. Since some previously proposed methods assume a single-viewpoint system, they place strong restrictions on the position and shape of the mirror. We propose a method that uses parallel lights to simplify the geometry of projection for estimating the position of the mirror, and therefore does not restrict the position or shape of the mirror. Further, by observing parallel lights from different directions, we omit the translation between the camera and calibration objects from the parameters to be estimated. We obtain the constraints on the projection and compute the error between the model of the mirror and the measurements. The position of the mirror is estimated by minimizing this error. We test our method by simulation and real experiments, and evaluate its accuracy. |

|

|

| Calibration of Lens Distortion by Structured-Light Scanning |

|

|

This paper describes a new method for automatically calibrating the distortion of wide-angle lenses. We project structured-light patterns using a flat display to generate a map between the display and image coordinate systems. This approach has two advantages. First, it is easier to obtain correspondences between image and marker (display) coordinates near the edges of a camera image than with a conventional marker, e.g. a checkerboard. Second, since we can easily construct a dense map, simple linear interpolation is enough to create an undistorted image. Our method is not restricted by distortion parameters because it generates the map directly. We evaluated the accuracy of our method, and the error is smaller than that obtained by parameter fitting. |
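
Once a dense map is available, undistortion reduces to a lookup with linear interpolation. The sketch below assumes the map stores, for each pixel of the undistorted output, the (x, y) position to sample in the captured image; that map direction, the nested-list image format, and the function names are assumptions for illustration. Building the map from the structured-light patterns is not shown.

```python
def bilinear(image, x, y):
    """Sample a grayscale image (list of rows) at a fractional position."""
    h, w = len(image), len(image[0])
    x = min(max(x, 0.0), w - 1.0)  # clamp to the image area
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = image[y0][x0] * (1.0 - fx) + image[y0][x1] * fx
    bottom = image[y1][x0] * (1.0 - fx) + image[y1][x1] * fx
    return top * (1.0 - fy) + bottom * fy


def undistort(image, coord_map):
    """Resample the image through a dense per-pixel coordinate map."""
    return [[bilinear(image, sx, sy) for sx, sy in row] for row in coord_map]


# Tiny example: a 2x2 image and a map whose third entry samples the center.
image = [[0, 10], [20, 30]]
coord_map = [[(0, 0), (1, 0)], [(0.5, 0.5), (1, 1)]]
result = undistort(image, coord_map)
```

No distortion model appears anywhere in the resampling, which is the sense in which a directly measured map avoids the limits of parameter fitting.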

|

|